Reliable data recovery is important because it ensures continuity, minimizes costly downtime, and protects against data loss from ransomware, human error, or hardware failure.

Business Data Growth and the Need for Reliable Recovery

KEY TAKEAWAYS

- Learn why data growth has become a structural risk

- Understand why recovery is no longer just a backup problem

- Discover the role of proxies in data stability

- Uncover the business impact of poor recovery planning

Data is one of the most valuable assets a business has, and as a company grows, so does the volume of its data. That data spreads quickly across platforms, vendors, regions, and formats, often outpacing the expectations of the teams responsible for managing it.

The modern data stack includes third-party tools, operational databases, analytics platforms, scraped datasets, and cloud storage layers. These components rarely live in one place, which makes recovery difficult.

Growing and collecting data is rarely the hard part. The real challenge is keeping that data stable, intact, and recoverable when something goes wrong.

This article looks at how businesses manage growing data, why they need reliable data recovery, and what happens when data fails.

Why Data Growth Has Become a Structural Risk

Data volume used to grow with headcount and infrastructure. That link is broken. Small teams now run data pipelines that rival what large enterprises ran not long ago. APIs, automation, and scraping frameworks make it possible to pull massive volumes quickly, but they also make it easier to trigger blocks, throttling, and silent data gaps.

As volume grows, access becomes the constraint. Businesses that require large datasets cannot rely on direct requests alone. They experiment with routes, identities, and collection patterns to stay operational. This is where risk creeps in: growth is driven by access workarounds, while storage, validation, and recovery do not get the same discipline.

The challenge is not only technical. It is organizational. Data spreads across marketing platforms, logistics systems, customer information stores, internal dashboards, and research environments. Each process pulls data differently, solves access problems locally, and leaves behind systems that are difficult to audit or restore.

High-Volume Marketing and Competitive Research

Marketing teams are usually the first to push data collection limits. Keyword tracking, SERP monitoring, ad intelligence, pricing comparison, and content analysis all require frequent, large-scale access to public platforms.

To avoid blocks, teams rotate identities more frequently. Residential proxies are used to replicate real users. Google-specific proxies help localize search results and avoid regional bias. Mobile proxies are layered in when platforms add restrictions. Datacenter proxies still exist in lower-risk tasks where speed matters more than stealth.

The problem is not the proxy choice itself, but the sprawl. Multiple proxy types, vendors, and configurations run at the same time. When data inconsistencies appear, it becomes difficult to trace whether the issue came from the source, the proxy layer, or the scraping logic.
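One way to make that tracing possible is to attach provenance to every record at collection time. The sketch below assumes each scraped record carries a hypothetical `Provenance` tag with the source, proxy type, proxy vendor, and scraper version. When inconsistencies appear, counting bad records along each dimension shows where they cluster. The field names and the `is_bad` check are illustrative, not a standard schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Provenance:
    """Hypothetical metadata attached to every scraped record."""
    source: str           # target site or platform
    proxy_type: str       # e.g. "residential", "mobile", "datacenter"
    proxy_vendor: str     # provider that supplied the IP
    scraper_version: str  # version of the parsing logic


@dataclass
class Record:
    value: dict
    provenance: Provenance


DIMENSIONS = ("source", "proxy_type", "proxy_vendor", "scraper_version")


def inconsistency_breakdown(records, is_bad):
    """Count bad records along each provenance dimension to see where errors cluster."""
    bad = [r for r in records if is_bad(r)]
    return {
        dim: dict(Counter(getattr(r.provenance, dim) for r in bad))
        for dim in DIMENSIONS
    }
```

Suppose every bad record shares one proxy vendor but spans several sources and a single scraper version. In that case the proxy layer is the first place to look.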

Product Intelligence and Market Monitoring

Beyond marketing, businesses collect data to analyze markets, not just their own visibility. Product availability, supplier pricing, inventory fluctuations, and competitor positioning are monitored continuously.

These systems prioritize consistency over freshness. Proxies are deliberately chosen for stability rather than churn. Static residential pools, region-locked IPs, and irregular request patterns lower detection risk. Blocks still happen, but less often and in more controlled ways.

When failures happen, recovery depends on knowing which segments were affected. Without proper logging and snapshotting, teams typically rerun entire collections, raising exposure and cost.
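A minimal sketch of segment-level logging follows. It assumes a collection job is split into named segments (for example region/category pairs) and writes one JSON line per finished segment; the log format and status values are hypothetical. Only segments whose latest status is not `"ok"`, or that never ran at all, are scheduled for a rerun.

```python
import json
from typing import Iterable


def segment_entry(segment: str, status: str, rows: int) -> str:
    """One JSON line per finished segment (hypothetical log format)."""
    return json.dumps({"segment": segment, "status": status, "rows": rows})


def segments_to_rerun(log_lines: Iterable[str], all_segments: list[str]) -> list[str]:
    """Segments whose latest logged status is not 'ok', or that never ran."""
    latest = {}
    for line in log_lines:
        if line.strip():
            entry = json.loads(line)
            latest[entry["segment"]] = entry["status"]  # later lines win
    return [s for s in all_segments if latest.get(s) != "ok"]
```

In practice the lines would be appended to a log file and read back with `open(...)`, which yields lines that `json.loads` accepts as-is.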

Operations, Compliance, and Internal Analytics

Some data collection has little to do with external platforms. Internal analytics, compliance reporting, and operational dashboards combine data from a range of internal and partner systems.

Here, blocking is less visible but equally damaging. API limits, authentication failures, and vendor outages interrupt data flows. Proxies may still be involved, particularly when accessing region-specific partner endpoints, but the recovery challenge shifts to orchestration.

If one upstream feed fails, downstream reports may still populate with partial data. Without recovery checkpoints, teams discover problems days later, after decisions have already been made.
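A recovery checkpoint can be as simple as a gate that refuses to publish a report until every required feed has a successful checkpoint covering the reporting period. The sketch below assumes each feed records the date through which it last completed successfully. The feed names and the `HOLD`/`PUBLISH` outcomes are hypothetical.

```python
from datetime import date


def missing_feeds(required: set[str], checkpoints: dict[str, date], period_end: date) -> list[str]:
    """Feeds whose last successful checkpoint does not cover the reporting period."""
    return sorted(
        feed for feed in required
        if checkpoints.get(feed) is None or checkpoints[feed] < period_end
    )


def publish_report(required: set[str], checkpoints: dict[str, date], period_end: date) -> str:
    gaps = missing_feeds(required, checkpoints, period_end)
    if gaps:
        # Hold the report instead of silently publishing partial data.
        return "HOLD: incomplete feeds: " + ", ".join(gaps)
    return "PUBLISH"
```

A held report is visible on the day it happens. That is the point: the gap surfaces before anyone acts on the numbers, not days later.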

Research, Training, and Long-Term Data Assets

Research teams and data science groups gather data for reuse. Training models, trend analysis, and historical studies need completeness more than speed.

These pipelines usually mix scraped, licensed, and internal data. Proxy usage is conservative but long-lived. The real risk lies in the transformation stages: cleaning, labeling, and aggregation. If a dataset is corrupted late in the process, re-collection may no longer be an option, because the original sources may have changed.

In these cases, recovery is not about re-accessing sources. It is about preserving intermediate states so work can continue without starting over.
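Preserving intermediate states can be sketched as stage-level checkpointing. Each stage's output is written to disk, and a rerun reuses the saved outputs instead of recomputing them. The sketch assumes stages are plain functions over JSON-serializable lists and that checkpoints live in a local directory; the stage names are hypothetical.

```python
import json
from pathlib import Path
from typing import Callable

Stage = tuple[str, Callable[[list], list]]


def run_pipeline(data: list, stages: list[Stage], checkpoint_dir: Path) -> list:
    """Run stages in order, persisting each stage's output. On a rerun, stages
    with an existing checkpoint are skipped and their saved output is reused."""
    checkpoint_dir.mkdir(parents=True, exist_ok=True)
    current, start = data, 0
    # Reuse the unbroken run of checkpoints starting from the first stage.
    for i, (name, _) in enumerate(stages):
        path = checkpoint_dir / f"{name}.json"
        if not path.exists():
            break
        current, start = json.loads(path.read_text(encoding="utf-8")), i + 1
    for name, fn in stages[start:]:
        current = fn(current)
        path = checkpoint_dir / f"{name}.json"
        tmp = path.with_suffix(".tmp")
        tmp.write_text(json.dumps(current), encoding="utf-8")
        tmp.replace(path)  # write-then-rename avoids leaving a partial checkpoint
    return current
```

Suppose a late stage such as labeling is corrupted. Deleting its checkpoint and rerunning repeats only that stage, starting from the saved output of the cleaning stage.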

Fragmented Sources Create Hidden Dependencies

A single report can take information from five or six upstream sources: CRM exports, scraped rival pricing, location data, ad platform metrics, and internal transaction logs. When one source breaks, the output becomes questionable — sometimes silently.
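To know which outputs become questionable when a source breaks, teams need a lineage map. The sketch below assumes a hand-maintained, hypothetical mapping from each report or intermediate model to the upstream nodes it reads from. It inverts that map and walks it breadth-first to find everything that depends on the broken source, directly or indirectly.

```python
from collections import deque

# Hypothetical lineage map: each node lists the upstream nodes it reads from.
UPSTREAM = {
    "weekly_margin_report": ["pricing_model", "transactions"],
    "pricing_model": ["competitor_prices", "ad_metrics"],
    "regional_dashboard": ["crm_export", "location_data"],
}


def affected_by(broken: str, upstream: dict[str, list[str]]) -> set[str]:
    """Every node that directly or indirectly depends on `broken`."""
    downstream: dict[str, list[str]] = {}
    for node, deps in upstream.items():
        for dep in deps:
            downstream.setdefault(dep, []).append(node)
    seen: set[str] = set()
    queue = deque([broken])
    while queue:
        for child in downstream.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen
```

With this map, a broken competitor-pricing scrape flags both the pricing model and the weekly margin report, while the regional dashboard stays trusted.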

Scraped data is especially vulnerable. Websites change layouts, add rate limits, or deploy anti-bot measures with little notice. A scraper that worked yesterday may return partial or malformed data today. Without validation and recovery steps, errors propagate downstream and distort analysis.
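A validation step can catch partial or malformed batches before they move downstream. This minimal sketch checks each record against a hypothetical schema (`sku`, `price`, `currency`) and rejects records with missing fields, wrong types, or non-positive prices. It then flags the whole batch if the share of valid records falls below an assumed threshold of 95%.

```python
from dataclasses import dataclass, field

REQUIRED_FIELDS = {"sku": str, "price": float, "currency": str}  # hypothetical schema


@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, reason) pairs


def validate_batch(records: list[dict], min_valid_ratio: float = 0.95) -> tuple[ValidationResult, bool]:
    result = ValidationResult()
    for rec in records:
        reason = None
        for name, typ in REQUIRED_FIELDS.items():
            if rec.get(name) is None:
                reason = f"missing {name}"
                break
            if not isinstance(rec[name], typ):
                reason = f"bad type for {name}"
                break
        if reason is None and rec["price"] <= 0:
            reason = "non-positive price"
        if reason:
            result.rejected.append((rec, reason))
        else:
            result.valid.append(rec)
    ratio = len(result.valid) / len(records) if records else 0.0
    # A sudden drop in the valid ratio often signals a layout change on the source.
    return result, ratio >= min_valid_ratio
```

Rejected records keep their reason, so a batch that fails the threshold can be quarantined and re-collected rather than merged.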

Speed of Collection Outpaces Protection

Many teams focus on how fast data can be gathered and processed, not on how it can be restored. Rotating IPs, proxies, and distributed scraping infrastructure are optimized for volume and access, not resilience. Logs may be incomplete, raw inputs overwritten, or intermediate results discarded to save space.

When an incident happens, whether a corrupted dataset, a failed migration, or a provider outage, teams find out too late that there is no clean rollback point.

Recovery Is No Longer a Backup Problem

Traditional backups were designed for relatively static systems. Modern data environments are dynamic. Tables change daily. Scraped datasets refresh hourly. Cloud resources scale up and down automatically. Recovery now means more than restoring a file from last night.

It means being able to reconstruct a reliable state of the data, even when the source that provided it has changed.

Recovery Requires Context, Not Just Copies

A raw backup without metadata is often of little use. Businesses need to know:

- Which proxy pool was utilized during collection

- Which source pages were accessed

- What transformations were applied

- What filters or exclusions were active

Without that context, restoring data may reproduce the same error that caused the failure in the first place. Reliable recovery restores both the data and a record of the conditions under which it was originally collected.
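One way to keep that context is to store a manifest next to each snapshot. The sketch below records a content hash plus the collection conditions listed above: proxy pool, source pages, transformations, and filters. All field names are illustrative assumptions, not a standard format. On restore, the hash confirms that the bytes being restored are the ones the manifest describes.

```python
import hashlib
from datetime import datetime, timezone


def build_manifest(data: bytes, *, proxy_pool: str, source_urls: list[str],
                   transformations: list[str], filters: dict) -> dict:
    """Describe how a snapshot was produced (field names are illustrative)."""
    return {
        "sha256": hashlib.sha256(data).hexdigest(),
        "created_at": datetime.now(timezone.utc).isoformat(),
        "proxy_pool": proxy_pool,
        "source_urls": sorted(source_urls),
        "transformations": transformations,  # in the order they were applied
        "filters": filters,
    }


def verify_snapshot(data: bytes, manifest: dict) -> bool:
    """Check that restored bytes match what the manifest recorded."""
    return hashlib.sha256(data).hexdigest() == manifest["sha256"]
```

The manifest is a plain dict, so it can be serialized with `json.dumps` and stored beside the snapshot.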

Scraping Pipelines Need Recovery Logic Built In

Data scraping is often viewed as an upstream activity — collect first, clean later. Recovery thinking reverses that approach. Pipelines should assume partial failure and design for it.

This includes keeping raw snapshots, versioning datasets, and retaining logs of response codes, proxy behavior, and timing anomalies. When a target site rejects a subset of requests, the recovery process should detect the gaps and refill them rather than silently accept incomplete results.
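Gap detection and refilling can be sketched from a response log. The sketch assumes the log is a list of `(url, status_code)` pairs and that `fetch` is a hypothetical callable returning `(status, body)`. Any expected URL without a 2xx response counts as a gap, and only those URLs are retried.

```python
def find_gaps(expected_urls: list[str], response_log: list[tuple[str, int]]) -> list[str]:
    """URLs with no successful (2xx) response in the log, in their original order."""
    succeeded = {url for url, status in response_log if 200 <= status < 300}
    return [u for u in expected_urls if u not in succeeded]


def refill(gaps: list[str], fetch, max_attempts: int = 3):
    """Retry only the missing URLs; return (recovered, still_missing)."""
    recovered, still_missing = {}, []
    for url in gaps:
        for _ in range(max_attempts):
            status, body = fetch(url)
            if 200 <= status < 300:
                recovered[url] = body
                break
        else:  # no attempt succeeded
            still_missing.append(url)
    return recovered, still_missing
```

A production version would add backoff between attempts and might route retries through a different proxy pool. URLs left in `still_missing` should be reported, not dropped.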

The Role of Proxies in Data Stability

Proxies are often discussed in terms of access: bypassing rate limits, managing geolocation, or distributing requests. Their role in recovery is less recognized but just as necessary.

Proxy Reliability Directly Affects Data Integrity

Unstable proxies introduce noise. Timeouts, inconsistent responses, and intermittent blocks produce uneven data quality. If a scraping job depends on a proxy pool with variable performance, the resulting dataset is likely to be internally inconsistent.

From a recovery perspective, this matters. Re‑running a job through a different proxy configuration can produce different outcomes, even against the same source. Reliable recovery needs predictable access behavior.

Logging Proxy Performance Enables Targeted Rebuilds

Instead of re-scraping everything after a failure, teams can isolate the affected segments if proxy usage is logged correctly. Knowing which IPs or regions experienced problems makes selective recovery possible.
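A minimal sketch of that isolation step follows. It assumes each proxy log entry is a dict with hypothetical keys `region`, `proxy_ip`, `ok`, and `segment`. The sketch computes failure rates per group (region by default, or per IP), flags groups above an assumed threshold, and treats every segment collected through a flagged group as suspect.

```python
from collections import defaultdict


def failing_groups(log, key="region", threshold=0.2, min_requests=20):
    """Failure rate per group, keeping only groups above `threshold`
    that have at least `min_requests` entries."""
    totals, failures = defaultdict(int), defaultdict(int)
    for entry in log:
        totals[entry[key]] += 1
        if not entry["ok"]:
            failures[entry[key]] += 1
    return {
        group: failures[group] / totals[group]
        for group in totals
        if totals[group] >= min_requests and failures[group] / totals[group] > threshold
    }


def segments_to_rebuild(log, bad_groups, key="region"):
    """Segments collected through a flagged group are the only ones re-scraped."""
    return sorted({entry["segment"] for entry in log if entry[key] in bad_groups})
```

Passing `key="proxy_ip"` narrows the analysis to individual IPs. The `min_requests` floor keeps a handful of failures on a rarely used group from triggering a rebuild.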

This lowers load, cost, and risk. It also shortens downtime, which is vital when data feeds operational decisions.

Designing Recovery for Continuous Data Operations

Recovery cannot be an optional component bolted onto a mature system. It has to be designed alongside the system as it develops.

Versioning Is a Recovery Multiplier

Treat datasets like code. Version them. Store diffs. Track schema changes. When something breaks, teams should be able to roll back to a known-good state without guessing which copy is trustworthy.
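The idea can be sketched with a small in-memory version store. For simplicity it keeps full copies rather than row-level diffs. Each commit gets a content-derived ID and records the column set, so schema changes can be diffed. Versions that pass validation are marked known-good, and rollback returns the most recent of those. Everything here is a hypothetical illustration, not a specific tool's API.

```python
import hashlib
import json


class DatasetVersions:
    """Minimal in-memory version store (a sketch; real setups persist versions)."""

    def __init__(self):
        self._versions = []  # (version_id, rows, columns, note), oldest first
        self._good = set()   # ids of versions that passed validation

    def commit(self, rows: list[dict], note: str = "") -> str:
        payload = json.dumps(rows, sort_keys=True).encode("utf-8")
        version_id = hashlib.sha256(payload).hexdigest()[:12]  # content-derived id
        columns = sorted({key for row in rows for key in row})
        # Store a decoded copy so later edits to `rows` cannot alter history.
        self._versions.append((version_id, json.loads(payload), columns, note))
        return version_id

    def mark_good(self, version_id: str) -> None:
        self._good.add(version_id)

    def schema_diff(self, old_id: str, new_id: str) -> dict:
        old, new = set(self._get(old_id)[2]), set(self._get(new_id)[2])
        return {"added": sorted(new - old), "removed": sorted(old - new)}

    def rollback(self) -> tuple[str, list[dict]]:
        """Return the most recent version marked known-good."""
        for version_id, rows, _, _ in reversed(self._versions):
            if version_id in self._good:
                return version_id, rows
        raise LookupError("no known-good version")

    def _get(self, version_id: str):
        for version in self._versions:
            if version[0] == version_id:
                return version
        raise KeyError(version_id)
```

Suppose a source changes its layout and a column is renamed. The schema diff shows the rename, and rollback returns the last validated copy without any guesswork.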

This is particularly relevant for scraped and aggregated data, where source volatility is normal. Versioning transforms instability into a manageable variable.

Test Recovery, Not Just Collection

A lot of teams test scraping success rates, proxy rotation efficiency, and throughput. Few test recovery speed and precision.

A recovery process that exists only on paper fails under pressure. Regular drills (restoring datasets, rehydrating pipelines, and validating outputs) reveal weak points before real incidents do.
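A recovery drill can be automated as a timed restore followed by validation. In this sketch, `restore` and `validate` are hypothetical hooks supplied by the team. `validate` returns a list of problems, empty when the output looks correct. A drill passes only if the restore finishes within the target recovery time and validation finds nothing.

```python
import time


def run_recovery_drill(restore, validate, rto_seconds: float) -> dict:
    """Time a restore, then validate its output; both callables are hypothetical hooks."""
    start = time.monotonic()
    restored = restore()
    elapsed = time.monotonic() - start
    problems = validate(restored)
    within_rto = elapsed <= rto_seconds
    return {
        "elapsed_s": round(elapsed, 3),
        "within_rto": within_rto,
        "problems": problems,
        "passed": within_rto and not problems,
    }
```

Tracking `elapsed_s` across scheduled drills shows whether restore times creep up as data volumes grow.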

Business Impact of Poor Recovery Planning

Data loss rarely declares itself dramatically. More often, it shows up as subtle inconsistencies: numbers that no longer fit together, reports that take longer to generate, and decisions that feel less certain.

Over time, trust erodes. Teams spend more energy verifying data than using it. Growth slows not because data is inaccessible, but because it is unreliable.

Competitive Data Is Especially Fragile

Market intelligence, pricing data, and trend monitoring typically rely on scraping and third-party sources. Losing historical context, or being unable to reconstruct past states, weakens competitive positioning.

Recovery protects continuity. It preserves the narrative of how markets moved, not simply where they are today.

Compliance and Accountability Depend on Recoverability

Regulatory environments typically expect data traceability. Being able to explain where data came from, how it was processed, and how it can be restored is no longer optional in many sectors.

Recovery is an element of governance, not just IT hygiene.

Building for the Next Phase of Data Growth

Data growth will continue. More sources, more automation, more scraping, more proxies. The systems that survive are not the ones that gather the most, but the ones that can lose data without losing control over it.

Reliable recovery is a strategic capability. It safeguards investment, supports scale, and maintains confidence when complexity grows.

Businesses that plan for recovery early avoid costly rewrites later. They treat data as a living asset — one that can be repaired, rebuilt, and trusted even as it expands.

Growth without recovery is fragile. Growth with recovery is durable.

Frequently Asked Questions

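Why is reliable data recovery so important for businesses?

Because modern data comes from many fragmented sources, a single failure can silently corrupt reports and the decisions built on them. Reliable recovery keeps operations running, shortens downtime, and lets teams restore a trustworthy state instead of starting over.
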
What is the difference between RPO and RTO?

RTO (recovery time objective) is about the time needed to restore systems (how long can we be down?), while RPO (recovery point objective) is about data loss (how much data can we afford to lose?).
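As a rough worked example, assume simple periodic backups and ignore backup duration and replication lag. A failure just before the next backup loses up to one full backup interval of data, so the interval must not exceed the RPO. A measured restore time, for example from a drill, must not exceed the RTO. The function and parameter names are illustrative.

```python
from datetime import timedelta


def check_objectives(backup_interval: timedelta, measured_restore: timedelta,
                     rpo: timedelta, rto: timedelta) -> dict:
    """Simplified check: worst-case data loss equals one backup interval."""
    return {
        "worst_case_data_loss": backup_interval,
        "meets_rpo": backup_interval <= rpo,
        "meets_rto": measured_restore <= rto,
    }


# Nightly backups against a 4-hour RPO, with a 90-minute restore against a 2-hour RTO.
result = check_objectives(timedelta(hours=24), timedelta(minutes=90),
                          rpo=timedelta(hours=4), rto=timedelta(hours=2))
```

In this scenario the restore is fast enough, but nightly backups could lose up to a day of data, far beyond the 4-hour RPO.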

Can businesses recover their data without a backup?

Yes, businesses can sometimes recover data without a proper backup, but it is risky, costly, and time-consuming.

Most people think hackers need advanced tools to find security gaps. Sorry to break the news, but sometimes, Google is…

If you are a part of any business, you might have attended meetings. And in case you were connected to…

SSD data recovery software can retrieve data that has been deleted, damaged, or otherwise rendered inaccessible from the SSD hard…

How can I improve the performance and grow my business? My team is already occupied with several projects, how do…

It feels very frustrating to lose all your digital data due to just one panic mistake of selecting a password…

Special Purpose Vehicles (SPVs) are a type of legally structured company. They are created by parent companies, usually with temporary…

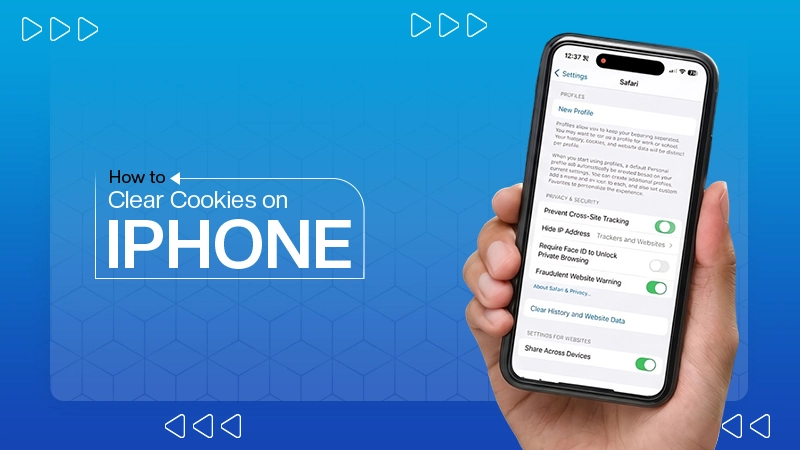

Web cache and cookies make browsing faster by saving logins, settings, and site data. But over time, they can slow…

Finding the best tools for your company feels like a big job. You need systems that actually help your team…

In the digital age, instant messaging apps have become an essential part of our daily communication. Whether for personal use,…