Beyond compliance, AI governance helps businesses balance innovation with responsibility, supporting sustainable success.

Why Business Leaders Are Prioritizing AI Governance in 2025

- The Turning Point: Why 2025 Matters

- Key Drivers Pushing AI Governance Up the Agenda

- What Effective AI Governance Actually Means

- Why Governance is a Business Advantage, Not a Constraint

- Real-World Governance Challenges Leaders Face

- Governance in Action: Practical Components

- Case Examples: Wins and Failures

- Building Governance Without Killing Innovation

- The Strategic Payoff

- Frequently Asked Questions

According to recent reports from Forbes, leading businesses are set to invest around $1 trillion in AI initiatives in the coming years.

In the past few years, AI has rapidly transformed from an experimental initiative into an essential business function. Executives are racing to harness large language models, autonomous systems, and data-driven automated decision-making. Alongside that value, a crucial realization has emerged: AI also carries significant legal, reputational, and operational risks.

That is why, in 2025, prioritizing AI governance has become essential. This article explains why business leaders are now making governance a priority, outlines what effective governance entails, and examines how to implement it in a way that fosters innovation rather than stifling it.

KEY TAKEAWAYS

- Think of 2025 as the turning point for creating a roadmap for the future.

- Check out the key drivers behind AI governance.

- Build governance alongside innovation, not after it, to get effective results.

The Turning Point: Why 2025 Matters

A few powerful trends have come together to make 2025 the year AI governance finally moved from theory to accountability. GenAI stopped being a novelty add-on and found real product-market fit in marketing personalization, customer support, and internal automation.

At the same time, regulators around the world moved from broad privacy laws to detailed rules that specifically target AI. The public also woke up quickly to deepfakes, biased algorithms, and reckless data practices as headline after headline exposed the risks.

Investors and boards began to treat unmanaged AI risk as a direct threat to a company’s valuation, not a technical detail buried in engineering. That shift changed how executives think. AI is no longer just a product problem; it is a brand liability, an enterprise risk, and a compliance issue waiting to happen.

As a result, tech roadmaps now sit side by side with governance frameworks, and publications like The Tech Leaders are releasing governance playbooks right next to product reviews. The message is clear: the cost of poor AI governance is no longer abstract. It is measurable, immediate, and damaging.

Key Drivers Pushing AI Governance Up the Agenda

Behind the visible statistics are several underlying factors pushing AI governance forward. Check them out below.

- Regulatory pressure and legal risk. Governments are setting out obligations for organizations that deploy AI. Compliance is no longer just about data protection; it now extends to safety testing, model transparency, and even supply-chain oversight for third-party models. Noncompliance can lead to fines and operational restrictions.

- Operational and safety risk. AI systems that automate decisions, such as loan approvals, medical triage, and candidate screening, can produce harmful outcomes when they fail or drift. Operational risk covers not only direct harm but also the downstream costs of remediation and lost trust.

- Brand and customer trust. Consumers react quickly to reports of biased algorithms or deceptive AI-generated content. For many companies, demonstrating governance is now as important as demonstrating innovation.

- Investor and board scrutiny. Investors frequently factor governance into risk assessments. Boards, often educated by compliance and legal teams, expect frameworks that make AI auditable, accountable, and aligned with corporate values.

- Speed of adoption. The faster AI features ship in products, the more likely governance gaps are to appear. Leaders are prioritizing governance so that oversight can keep pace with deployment.

What Effective AI Governance Actually Means

Good governance is not a single policy or a checkbox. Think of it as a set of complementary practices designed to keep AI systems safe, fair, and reliable across their lifecycle. Core pillars include:

- Data Governance and Provenance. Knowing where training data came from, what it contains, and how it is processed. Provenance is essential for audits and for responding to privacy concerns.

- Model Validation and Testing. Continuous evaluation for accuracy, robustness, bias, and adversarial vulnerabilities. This includes pre-deployment testing and periodic monitoring for model drift.

- Explainability and Transparency. Documented decision paths and model rationale where feasible, so stakeholders can understand why a system made a particular recommendation or decision.

- Accountability and Roles. Clear assignment of responsibility: who signs off on deployment, who owns monitoring, and who responds to incidents. High-risk use cases should include human-in-the-loop checkpoints.

- Policy, Ethics, and Standards. Organizational policies aligned with legal requirements and contractual commitments, supported by training and governance committees.

- Operational Controls (MLOps + GovOps). Integration of governance into CI/CD and operational frameworks so compliance scales with deployment speed.
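To make the Operational Controls pillar more concrete, here is a minimal Python sketch of a pre-deployment gate that a CI/CD pipeline could run before promoting a model. It assumes a hypothetical `ModelCard` structure and hypothetical metric thresholds (`accuracy`, `fairness_gap`); the field names and limits are illustrative assumptions, not a standard format, and a real gate would load them from your own governance policy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative assumptions."""
    name: str
    purpose: str
    data_sources: list[str]         # provenance: where training data came from
    metrics: dict[str, float]       # validation results, e.g. {"accuracy": 0.91}
    known_limitations: list[str]
    monitoring_plan: str
    approved_by: str | None = None  # accountable owner who signs off on deployment


# Hypothetical thresholds a governance committee might set: (direction, limit).
THRESHOLDS = {
    "accuracy": ("min", 0.85),
    "fairness_gap": ("max", 0.05),  # e.g. largest metric gap between user groups
}


def deployment_gate(card: ModelCard) -> list[str]:
    """Return blocking problems; an empty list means the model may be promoted."""
    problems = []
    if not card.data_sources:
        problems.append("missing data provenance")
    if not card.known_limitations:
        problems.append("known limitations not documented")
    if not card.monitoring_plan:
        problems.append("no monitoring plan")
    if card.approved_by is None:
        problems.append("no accountable sign-off")
    for metric, (direction, limit) in THRESHOLDS.items():
        value = card.metrics.get(metric)
        if value is None:
            problems.append(f"metric '{metric}' not reported")
        elif direction == "min" and value < limit:
            problems.append(f"{metric}={value} below minimum {limit}")
        elif direction == "max" and value > limit:
            problems.append(f"{metric}={value} above maximum {limit}")
    return problems


if __name__ == "__main__":
    card = ModelCard(
        name="support-reply-ranker",
        purpose="Rank suggested replies for support agents",
        data_sources=["anonymized support tickets"],
        metrics={"accuracy": 0.82, "fairness_gap": 0.03},
        known_limitations=["English-only"],
        monitoring_plan="weekly drift report",
    )
    for problem in deployment_gate(card):
        print("BLOCKED:", problem)
```

In a pipeline, a non-empty result would fail the build (for example, by exiting with a non-zero status), so documentation, validation, and sign-off are enforced at the same pace as deployment.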

Why Governance is a Business Advantage, Not a Constraint

Keep in mind that good governance reduces friction, not innovation. It lowers the likelihood of regulatory penalties, prevents costly mistakes that erode customer trust, and makes AI deployments more predictable. Organizations that embed governance into their product lifecycle can move faster because they face fewer surprises and have more reliable rollback and remediation processes. In short, governance turns AI from a hazardous experiment into a repeatable capability.

Real-World Governance Challenges Leaders Face

- Lack of Common Standards. Industries are at different maturity levels; what is appropriate in healthcare may differ from what works in retail. That makes a one-size-fits-all approach ineffective.

- Skill Gaps. Many leadership teams lack technical AI literacy, making it particularly challenging to evaluate risk vs. reward. The same goes for compliance teams unfamiliar with model behavior.

- Tooling and Measurement. Measuring fairness, monitoring model drift, and creating explainability artifacts require new tooling and processes that many companies do not yet have.

- Third-Party Model Risk. Using external models or APIs means relying on another vendor’s governance — and that creates supply-chain risk.

- Balancing Speed and Oversight. Leaders must find ways to let product teams innovate while maintaining guardrails for high-risk launches.

Governance in Action: Practical Components

- Risk Tiering. Classify AI projects by expected impact (low/medium/high). High-risk projects require human oversight, stricter controls, and formal approval paths.

- Model Cards and Documentation. Maintain concise documentation for each model: purpose, data sources, performance metrics, known limitations, and monitoring plans.

- Pre-Deployment Checks and Red-Team Testing. Simulate adversarial scenarios and edge cases before going live.

- Continuous Monitoring and Drift Detection. Track performance and fairness metrics in production; trigger retraining or rollback when deterioration is detected.

- Incident Response and Audit Trails. Keep incident playbooks and immutable logs so you can investigate what went wrong and demonstrate compliance to regulators or auditors.
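Drift detection can be sketched in a few lines. The example below uses the Population Stability Index (PSI), one common way to compare a production score or feature distribution against its validation-time baseline; it is a technique chosen here for illustration, not one the article prescribes. The 0.1 and 0.25 cut-offs are widely used rules of thumb, treated here as assumptions, and the action names returned by the hypothetical `drift_action` helper are placeholders for your own retraining or rollback process.

```python
import math


def psi(baseline: list[float], production: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a production sample.

    Bins are equal-width over the baseline's range; production values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_action(score: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI score to a hypothetical governance action (rule-of-thumb cut-offs)."""
    if score >= alert:
        return "rollback_or_retrain"
    if score >= warn:
        return "investigate"
    return "ok"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]          # scores seen at validation time
    production = [0.5 + i / 200 for i in range(100)]  # scores shifted upward in production
    score = psi(baseline, production)
    print(f"PSI={score:.2f} -> {drift_action(score)}")
```

Running a check like this on a schedule, and writing each score and action to the audit log, gives the monitoring, retraining, and rollback steps above a measurable trigger.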

Case Examples: Wins and Failures

Organizations that implemented governance early report fewer surprises and faster regulatory responses. For example, firms that used model cards and continuous monitoring detected biased outputs before they became a public crisis and were able to remediate within weeks.

Conversely, organizations that rushed GenAI features into customer-facing products without adequate testing experienced public backlash and had to pause services, causing revenue and reputational loss.

Building Governance Without Killing Innovation

Leaders should take a pragmatic approach. The method is simple: start with basic risk mapping, then keep iterating.

- Start With Risk Mapping. Identify the highest-impact use cases and implement governance there first.

- Use Lightweight, Scalable Controls. Automate monitoring and use policy templates to help minimize manual workload.

- Invest In Literacy. Train leaders and teams on AI basics and governance responsibilities.

- Leverage External Frameworks. Use widely adopted guidance and compliance checklists as a baseline, adapting them to the company context.

- Iterate. Governance should evolve with technology; it is not a one-off project but a continuous program.
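Risk mapping and risk tiering can start as simply as a function that assigns each use case a tier and a list of required controls. In this sketch, the classification criteria (`automated_decision_about_people`, `customer_facing`, `regulated_domain`) and the control lists are hypothetical examples, not a regulatory standard; adapt them to the external framework you adopt and to your company context.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class UseCase:
    """Hypothetical risk-mapping inputs for one AI use case."""
    name: str
    automated_decision_about_people: bool  # e.g. loan approvals, candidate screening
    customer_facing: bool
    regulated_domain: bool                  # e.g. healthcare or financial services


# Hypothetical controls per tier; HIGH adds human oversight and formal approval.
REQUIRED_CONTROLS = {
    Tier.LOW: ["model card"],
    Tier.MEDIUM: ["model card", "pre-deployment testing", "drift monitoring"],
    Tier.HIGH: [
        "model card", "pre-deployment testing", "red-team testing",
        "drift monitoring", "human-in-the-loop review", "formal sign-off",
    ],
}


def classify(uc: UseCase) -> Tier:
    """Assign a risk tier using illustrative criteria, not a regulatory definition."""
    if uc.automated_decision_about_people or uc.regulated_domain:
        return Tier.HIGH
    if uc.customer_facing:
        return Tier.MEDIUM
    return Tier.LOW


def governance_plan(use_cases: list[UseCase]) -> list[tuple[str, Tier, list[str]]]:
    """Order use cases so the highest-risk ones are governed first."""
    rank = {Tier.HIGH: 0, Tier.MEDIUM: 1, Tier.LOW: 2}
    tiered = [(uc.name, classify(uc)) for uc in use_cases]
    tiered.sort(key=lambda item: rank[item[1]])  # stable: keeps input order within a tier
    return [(name, tier, REQUIRED_CONTROLS[tier]) for name, tier in tiered]


if __name__ == "__main__":
    portfolio = [
        UseCase("internal document search", False, False, False),
        UseCase("support chatbot", False, True, False),
        UseCase("loan pre-approval", True, True, True),
    ]
    for name, tier, controls in governance_plan(portfolio):
        print(f"{tier.value:>6}  {name}: {', '.join(controls)}")
```

Sorting by tier reflects the advice to govern the highest-impact use cases first, while lighter tiers still get a model card so documentation scales across the whole portfolio.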

The Strategic Payoff

In 2025, AI governance has emerged as a differentiator. Organizations that demonstrate responsible AI practices reduce regulatory risk, win customer trust, and position themselves favorably with investors and partners. Governance is no longer a box to check after deployment — it’s a design principle for the AI-enabled enterprise.

AI offers transformative potential, but only when deployed with care. Prioritizing governance is how business leaders turn a disruptive technology into a sustainable advantage, ensuring that innovation is matched by accountability and trust.

Frequently Asked Questions

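Why do business leaders need AI governance in 2025?

AI has moved from experiment to core business function, while regulators, customers, investors, and boards now treat unmanaged AI risk as a direct threat to the business. Governance lets leaders manage that risk without slowing innovation.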

What are the main business risks associated with ungoverned AI?

The main risks are reputational damage, regulatory penalties, operational failures, and ethical concerns.

Does AI governance help a business achieve a competitive advantage?

Yes. Effective AI governance acts as a strategic enabler for innovation, giving the business a competitive edge.

Is AI governance an IT-only responsibility?

No. It requires a cross-functional approach that involves compliance, HR, and risk management, not just IT.

Most people think hackers need advanced tools to find security gaps. Sorry to break the news, but sometimes, Google is…

If you are a part of any business, you might have attended meetings. And in case you were connected to…

SSD data recovery software can retrieve data that has been deleted, damaged, or otherwise rendered inaccessible from the SSD hard…

How can I improve the performance and grow my business? My team is already occupied with several projects, how do…

It feels very frustrating to lose all your digital data due to just one panic mistake of selecting a password…

Special Purpose Vehicles (SPVs) are a type of legally structured company. They are created by parent companies, usually with temporary…

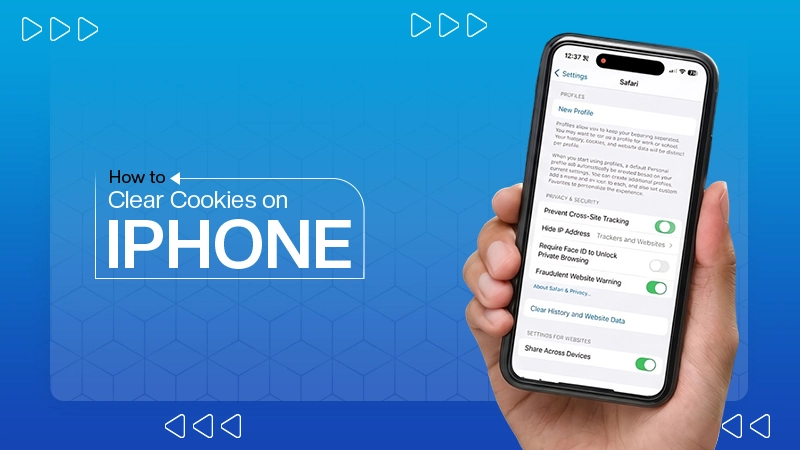

Web cache and cookies make browsing faster by saving logins, settings, and site data. But over time, they can slow…

Finding the best tools for your company feels like a big job. You need systems that actually help your team…

In the digital age, instant messaging apps have become an essential part of our daily communication. Whether for personal use,…